Look, I'll be straight with you: if you've ever found yourself manually copying business information from Google Maps at 2 AM, questioning your life choices, you're not alone. I've been there, bleary-eyed and defeated, wondering why there isn't a better way to extract all that juicy business data sitting right there on the screen.

Here's the thing: there is a better way. Actually, there are about twenty better ways, ranging from sleek no-code tools to sophisticated Python scripts that make you feel like a tech wizard. Whether you're hunting for leads, building local SEO strategies, or conducting market research, scraping Google Maps can transform hours of tedious work into minutes of automated brilliance.

In this guide, I'm walking you through everything—the legal stuff (yes, we need to talk about it), the tools that actually work, and the techniques that won't get you blocked faster than you can say "CAPTCHA." Let's dive in.

What Exactly Is Google Maps Scraping?

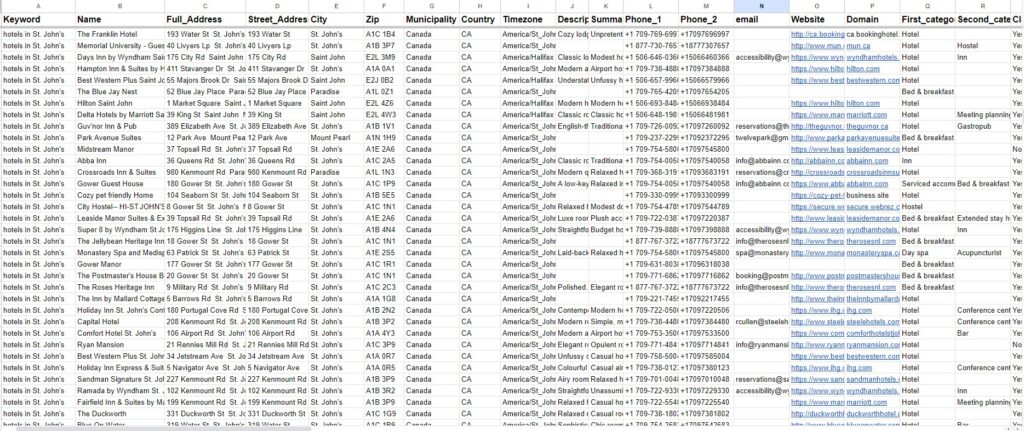

Before we get into the nitty-gritty, let's establish what we're actually talking about here. Google Maps scraping is the process of extracting publicly available business data from Google Maps listings. We're talking names, addresses, phone numbers, websites, reviews, ratings, operating hours—basically everything you see when you search for "coffee shops near me."

Think of it as automated data collection. Instead of manually visiting each business listing and copying information into a spreadsheet (shudder), you use tools or scripts to gather that data systematically. It's like having a really efficient, tireless assistant who never needs coffee breaks.

The data you can extract includes:

- Business names and categories

- Complete addresses and coordinates

- Phone numbers and websites

- Email addresses (when available, usually pulled from the business's website rather than the listing itself)

- Customer reviews and ratings

- Operating hours

- Photos and descriptions

- Social media links

This information is gold for digital marketers, sales professionals, lead generators, SEO specialists, and anyone who needs comprehensive local business intelligence.

Is It Legal to Scrape Google Maps Data?

Okay, let's address the elephant in the room—the legal question that keeps people up at night.

The short answer? It's complicated. The long answer? Still complicated, but with nuance.

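Google's Terms of Service explicitly prohibit automated access to their services, including Google Maps. However (and this is a big however), U.S. courts have been reluctant to treat scraping of publicly available data as a violation of computer fraud laws. In the landmark hiQ Labs v. LinkedIn case, the Ninth Circuit held that scraping publicly accessible profiles likely did not violate the Computer Fraud and Abuse Act. hiQ was later found to have breached LinkedIn's user agreement, though, so terms-of-service claims remain a real risk even where anti-hacking laws don't apply.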

Here's my take: you're working with publicly visible data that businesses have chosen to display. You're not hacking into private databases or stealing credit card numbers. That said, you need to be smart about it:

The responsible approach:

- Respect robots.txt files and rate limits

- Don't overwhelm Google's servers with requests

- Use the data ethically—no spam, no harassment

- Consider using official APIs when possible for commercial projects

- Add appropriate delays between requests

- Be transparent about your data collection methods
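Here's a minimal sketch of the robots.txt check using Python's standard-library `urllib.robotparser`. To keep it runnable offline, it parses a hypothetical rule set from a string; the rules, the `is_allowed` helper, and the `my-research-bot` user-agent name are illustrative assumptions. For a real site you would call `set_url()` and `read()` on the parser instead of `parse()`.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt rules, parsed from a string so the example runs offline.
SAMPLE_RULES = """\
User-agent: *
Disallow: /private/
Allow: /
""".splitlines()


def is_allowed(url: str, user_agent: str = "my-research-bot") -> bool:
    """Return True if the sample rules let `user_agent` fetch `url`."""
    parser = RobotFileParser()
    parser.parse(SAMPLE_RULES)
    return parser.can_fetch(user_agent, url)


print(is_allowed("https://example.com/listings"))   # True
print(is_allowed("https://example.com/private/x"))  # False
```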

The reality check:

Many businesses successfully scrape Google Maps for legitimate purposes like lead generation, market research, and competitive analysis. The key is doing it responsibly and understanding the risks. If you're building a major commercial operation, consult a lawyer. If you're a freelancer gathering leads for outreach, your risk is generally lower, but that's not a license to be reckless.

Why Scrape Google Maps? (The Business Case)

You might be wondering, "Why go through all this trouble?" Fair question. Let me paint you a picture.

Imagine you're a digital marketing agency specializing in restaurants. You want to offer your services to Italian restaurants in Chicago that have fewer than 100 reviews (they're still growing and need help). Manually, this would take days. With scraping? About fifteen minutes.
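Once the data is scraped, that "fifteen minutes" is mostly a filter. A minimal sketch, assuming each listing has already been parsed into name, category, city, and review count (the `Listing` fields and the sample rows are made up for illustration):

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Listing:
    name: str
    category: str
    city: str
    review_count: int


def growth_prospects(listings: List[Listing], category: str = "Italian restaurant",
                     city: str = "Chicago", max_reviews: int = 100) -> List[Listing]:
    """Keep listings in the target category and city with fewer than `max_reviews` reviews."""
    return [
        item for item in listings
        if item.category == category and item.city == city and item.review_count < max_reviews
    ]


sample = [
    Listing("Trattoria Uno", "Italian restaurant", "Chicago", 42),
    Listing("Pasta Palace", "Italian restaurant", "Chicago", 850),
    Listing("Taco Spot", "Mexican restaurant", "Chicago", 30),
]
print([item.name for item in growth_prospects(sample)])  # ['Trattoria Uno']
```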

Real-world applications:

Lead Generation: Build targeted lists of potential clients based on location, industry, and rating. Sales teams love this because it's specific, current, and actionable.

Market Research: Understand the competitive landscape. How many competitors are in a specific area? What are their ratings? What are customers saying in reviews?

Local SEO: Analyze how businesses rank for specific keywords, identify gaps in the market, and optimize your clients' listings based on successful competitors.

Data Enrichment: Supplement your existing customer database with additional contact information, reviews, and business details.

Competitive Intelligence: Track competitor locations, expansion patterns, pricing mentions in reviews, and customer sentiment.

I once worked with a solar panel installer who used Google Maps scraping to identify homeowners' associations and property management companies in sunny states. They filtered by location, verified contact details, and built a targeted outreach campaign that tripled their B2B sales pipeline. That's the power of smart data extraction.

Methods to Scrape Google Maps (From Easy to Advanced)

Alright, let's get practical. Here are your options, ranked from "my grandma could do this" to "I speak fluent Python."

No-Code Tools (Perfect for Beginners)

If you break out in hives at the sight of code, these visual tools are your best friends.

Outscraper stands out as the most beginner-friendly option. Their free tier lets you test the waters, and the interface is refreshingly simple—enter keywords, select locations, click scrape, download CSV. Done. They even extract email addresses and phone numbers automatically, which is clutch for lead generation.

Octoparse comes with pre-built Google Maps templates. You literally point and click at the data you want, and it figures out the rest. Their cloud execution means you can close your laptop and let it run in the background. Perfect for bulk scraping Google Maps locations without babysitting the process.

Leads Sniper offers a suite of scraping tools for Google Maps, Google Search, Yellow Pages, and domains, helping businesses collect leads and contact details such as email addresses.

Chrome Extensions (Quick and Dirty)

For quick jobs, Chrome extensions are surprisingly effective.

Map Lead Scraper sits in your browser and extracts data as you browse. It's ideal for small-scale projects—think 50-100 leads rather than 5,000. The monthly pricing is reasonable, and setup takes about three minutes.

G Maps Extractor offers both free and paid versions. The free version has limitations, but it's genuinely useful for testing concepts or gathering small datasets. The keyword generator helps you discover search terms you hadn't considered.

API-Based Solutions (Developer-Friendly)

If you're comfortable with code or working with developers, APIs offer the most control and reliability.

Bright Data's Google Maps Scraper is enterprise-grade stuff. We're talking proxy rotation, CAPTCHA solving, and the ability to handle massive scale. It's pricey, but for large lead gen operations, it's worth every penny. Their infrastructure is bulletproof—I've run concurrent requests without a single failure.

Oxylabs excels at precise data parsing. Their JavaScript rendering handles dynamic content flawlessly, which is crucial because Google Maps loads data asynchronously. For competitive pricing intelligence, this is my go-to.

Scrapingdog deserves special mention for speed. They achieved a 100% success rate in recent benchmarks, and at $0.00033 per request, it's economical for high-volume projects. Plus, they offer 1,000 free credits to test Maps data pulls.

Python Scripts (Maximum Flexibility)

For the technically inclined, Python with Selenium offers complete customization. You control every aspect of the scraping process.

How to Scrape Google Maps with Python and Selenium

Let me walk you through the Python approach because it's powerful, free, and teaches you valuable skills.

What you'll need:

- Python 3.8 or higher installed

- Selenium library

- ChromeDriver matching your Chrome version (Selenium 4.6 and later can download it automatically via Selenium Manager)

- Basic Python knowledge (or willingness to learn)

The concept: Selenium automates a real browser, mimicking human behavior. It navigates to Google Maps, performs searches, scrolls through results, and extracts data—just like you would manually, but faster and without the soul-crushing boredom.

Basic workflow:

- Set up your Python environment and install Selenium

- Configure ChromeDriver and browser options

- Navigate to Google Maps with your search query

- Wait for results to load (crucial for dynamic content)

- Scroll through listings to trigger lazy-loading

- Extract data using CSS selectors or XPath

- Store results in structured format (CSV, JSON, database)

- Implement delays and error handling to avoid blocks

Pro tips from experience:

Use random delays between actions; humans don't click at perfectly regular intervals. Vary your waits between 2 and 5 seconds for a more natural rhythm.
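A small helper keeps the pacing in one place. This is a sketch, not a library function: `polite_pause` is a hypothetical name, and the `sleep` parameter exists only so the helper can be tested without actually waiting.

```python
import random
import time


def polite_pause(min_s: float = 2.0, max_s: float = 5.0, sleep=time.sleep) -> float:
    """Sleep for a random duration between min_s and max_s seconds; return the delay used."""
    delay = random.uniform(min_s, max_s)
    sleep(delay)
    return delay


# Typical use inside a scraping loop:
# for query in queries:
#     ...do one search or scroll...
#     polite_pause()
```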

Rotate user agents to appear as different browsers. Google's anti-bot systems look for patterns, so mixing Chrome, Firefox, and Safari user agents helps.

Handle pagination carefully. Google Maps doesn't use traditional page numbers—you'll need to scroll to the bottom of results to load more listings.
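The "scroll until nothing new loads" logic can be written independently of the browser. Below is a sketch that takes two callables, one that scrolls and one that counts visible results, and stops once the count stops growing. With Selenium, `scroll` might run `driver.execute_script("arguments[0].scrollTop = arguments[0].scrollHeight", panel)` plus a short pause, and `count_results` might return `len(panel.find_elements(By.CSS_SELECTOR, ...))`; the panel element and selector are assumptions left to you, since Google changes its markup. `FakeFeed` is a stand-in for testing only.

```python
from typing import Callable


def scroll_until_stable(scroll: Callable[[], None],
                        count_results: Callable[[], int],
                        max_rounds: int = 50,
                        patience: int = 2) -> int:
    """Scroll a lazy-loading results list until its length stops growing.

    Stops after `patience` consecutive scrolls that load nothing new, or after
    `max_rounds` scrolls in total, and returns the final result count.
    """
    last = count_results()
    stale = 0
    for _ in range(max_rounds):
        scroll()
        current = count_results()
        if current > last:
            last, stale = current, 0
        else:
            stale += 1
            if stale >= patience:
                break
    return last


# Stand-in for a results panel that loads 20 more entries per scroll, up to `total`.
class FakeFeed:
    def __init__(self, total: int):
        self.loaded, self.total = 20, total

    def scroll(self) -> None:
        self.loaded = min(self.loaded + 20, self.total)

    def count(self) -> int:
        return self.loaded


feed = FakeFeed(total=60)
print(scroll_until_stable(feed.scroll, feed.count))  # 60
```

The `patience` setting matters because one scroll that loads nothing may just be a slow network response, not the true end of the list.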

Implement try-except blocks liberally. Networks hiccup, elements change position, stuff breaks. Graceful error handling keeps your script running.
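One way to make those try-except blocks systematic is a retry wrapper with exponential backoff. A minimal sketch: `with_retries` is a hypothetical helper, and with Selenium you would likely narrow `retry_on` to exceptions such as `TimeoutException` and `NoSuchElementException` from `selenium.common.exceptions` rather than catching everything.

```python
import random
import time


def with_retries(action, attempts=4, base_delay=2.0, retry_on=(Exception,), sleep=time.sleep):
    """Call action(); if it raises one of `retry_on`, back off exponentially and try again.

    Waits base_delay * 2**attempt seconds plus up to 1s of random jitter between tries
    (about 2s, 4s, 8s with the defaults) and re-raises the last error if all attempts fail.
    """
    for attempt in range(attempts):
        try:
            return action()
        except retry_on:
            if attempt == attempts - 1:
                raise
            sleep(base_delay * 2 ** attempt + random.uniform(0, 1))
```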

Common pitfall: Don't try to scrape 10,000 listings in one session. Break it into smaller batches over time. Patience beats getting your IP blocked.
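Splitting a big job into batches takes only a few lines. A sketch, assuming your work is a list of search queries or listing URLs; Python 3.12 ships `itertools.batched`, but this version also runs on the 3.8+ mentioned above.

```python
from itertools import islice


def batched(items, size):
    """Yield successive lists of at most `size` items."""
    it = iter(items)
    while chunk := list(islice(it, size)):
        yield chunk


print([len(b) for b in batched(range(10), 3)])  # [3, 3, 3, 1]
```

Each batch can then run in its own session, spread out over hours or days.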

Can I Scrape Google Maps Without Using the API?

Absolutely, and honestly, most people do.

Google's official APIs (Places API, Maps JavaScript API) are legitimate and supported, but they come with costs and rate limits. The Places API, for example, charges per request after your free tier, and those charges add up quickly for large-scale projects.
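For comparison, here's roughly what the official route looks like. This sketch builds, but does not send, a Places API (New) Text Search request using only the standard library; the query, key placeholder, and chosen fields are illustrative, so check Google's current Places documentation before relying on it. The field mask limits which fields come back, and requesting fewer fields can also lower the per-request price.

```python
import json
import urllib.request
from typing import Sequence

PLACES_TEXT_SEARCH_URL = "https://places.googleapis.com/v1/places:searchText"


def build_text_search_request(query: str, api_key: str,
                              fields: Sequence[str]) -> urllib.request.Request:
    """Build (but do not send) a Places API (New) Text Search request."""
    body = json.dumps({"textQuery": query}).encode("utf-8")
    return urllib.request.Request(
        PLACES_TEXT_SEARCH_URL,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "X-Goog-Api-Key": api_key,
            # Only these fields are returned, which also affects billing.
            "X-Goog-FieldMask": ",".join(fields),
        },
    )


req = build_text_search_request(
    "Italian restaurants in Chicago",
    "YOUR_API_KEY",  # placeholder, not a real key
    ["places.displayName", "places.formattedAddress", "places.rating"],
)
# urllib.request.urlopen(req) would send it; that needs a real key and incurs charges.
```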

Non-API methods:

Web scraping directly from the Google Maps interface using tools like Selenium, Puppeteer, or no-code scrapers. This accesses the same public data you see in your browser.

SERP APIs like SerpAPI or SearchAPI query Google Maps through search result endpoints. They handle the technical complexity while you focus on data processing.

Chrome extensions extract visible data from your browser session without making separate API calls.

The trade-off? API methods are more stable and officially supported, while scraping methods require more maintenance when Google updates their interface. However, for most lead generation and research purposes, non-API scraping is more cost-effective and flexible.

I've run projects both ways. For client work requiring absolute reliability, I use APIs. For my own prospecting and research, I scrape directly and save the API costs for other tools.

What Data Fields Can Be Extracted from Google Maps?

The depth of information available is honestly impressive. Here's what you can realistically extract:

Core Business Information:

- Business name

- Full address (street, city, state, ZIP, country)

- Geographic coordinates (latitude/longitude)

- Phone number

- Website URL

- Business category/type

Contact and Social:

- Email addresses (when displayed)

- Social media profiles (Facebook, Instagram, Twitter links)

- Booking or reservation links

Customer Intelligence:

- Overall rating (out of 5 stars)

- Total number of reviews

- Individual review text and ratings

- Reviewer names and profile info

- Review dates and response times

- Photos uploaded by customers

Operational Details:

- Operating hours (daily schedule)

- Busy times and visit duration

- Accessibility information

- Amenities and features

- Price range indicators

- Popular times graph data

Advanced Data:

- Place ID (unique Google identifier)

- Plus codes

- Menu items and pricing (for restaurants)

- Service offerings

- FAQs and Q&A sections

Not every listing contains all fields—it depends on what the business owner has provided and what customers have contributed. But with the right scraper, you can capture everything that's publicly visible.
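Because fields are often missing, it helps to define a record type where nearly everything is optional. A sketch of one possible schema; the field names are my own choices for illustration, not a format any particular tool exports.

```python
from dataclasses import asdict, dataclass, field
from typing import List, Optional


@dataclass
class PlaceRecord:
    """One scraped listing; anything not shown on the listing stays None (or empty)."""
    name: str
    address: Optional[str] = None
    latitude: Optional[float] = None
    longitude: Optional[float] = None
    phone: Optional[str] = None
    website: Optional[str] = None
    category: Optional[str] = None
    rating: Optional[float] = None
    review_count: Optional[int] = None
    place_id: Optional[str] = None
    hours: List[str] = field(default_factory=list)


rec = PlaceRecord(name="Example Bakery", rating=4.6, review_count=87)
print(asdict(rec)["phone"])  # None
```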

How to Avoid Getting Blocked While Scraping Google Maps

This is where amateurs become pros. Getting blocked is frustrating and wastes time. Here's how to fly under the radar.

Use Residential Proxies

Datacenter proxies are cheap but obvious. Google knows these IP ranges and flags them quickly. Residential proxies route your requests through real residential IP addresses, making your traffic look like genuine users browsing from home.

Services like Bright Data, Oxylabs, and Froxy offer proxy pools specifically optimized for Google scraping. Yes, they're more expensive, but they work.

Rotate Everything

Rotate IP addresses every 10-20 requests. Rotate user agents randomly. Even rotate your request headers to mimic different browsers and devices. Predictable patterns are your enemy.

Respect Rate Limits

Don't hammer Google's servers. Implement delays between requests—3 to 7 seconds is reasonable. For large-scale scraping, distribute requests over hours or days rather than trying to grab everything in minutes.

Mimic Human Behavior

Real users don't open 50 tabs simultaneously. They scroll, pause, occasionally backtrack. Your scraper should do the same. Random mouse movements, scroll patterns, and variable timing make you look human.

Handle CAPTCHAs Gracefully

You'll encounter CAPTCHAs eventually. Services like 2Captcha or Anti-Captcha solve them automatically for a small fee. Alternatively, slow down your scraping when you hit one—aggressive behavior triggers more challenges.

Use Sessions and Cookies

Maintain browser sessions like a real user. Accept cookies, store them, and reuse them across requests. This makes your activity appear consistent rather than coming from random, anonymous sources.

Monitor and Adapt

Watch your success rates. If you're getting blocked or seeing more CAPTCHAs, you're going too fast. Scale back, adjust your approach, and try again.
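"Watch your success rates" can be automated. The sketch below tracks the outcome of recent requests and doubles the wait while the success rate is below a threshold, then eases back. `SuccessMonitor` and its defaults (a 50-request window, 90% threshold, 3-second base delay) are hypothetical choices; what counts as a failure (an error page, a CAPTCHA, an empty result) is up to your scraper.

```python
from collections import deque


class SuccessMonitor:
    """Track the success rate over the last `window` requests and adjust the delay.

    Doubles the delay (up to max_delay) while the rate is below `threshold`,
    and eases it back toward base_delay once things look healthy again.
    """

    def __init__(self, window=50, threshold=0.9, base_delay=3.0, max_delay=120.0):
        self.results = deque(maxlen=window)
        self.threshold = threshold
        self.base_delay = base_delay
        self.max_delay = max_delay
        self.delay = base_delay

    def record(self, ok: bool) -> float:
        """Record one request outcome and return the delay to use before the next one."""
        self.results.append(ok)
        rate = sum(self.results) / len(self.results)
        if rate < self.threshold:
            self.delay = min(self.delay * 2, self.max_delay)  # slow down
        else:
            self.delay = max(self.delay * 0.9, self.base_delay)  # recover gradually
        return self.delay
```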

I learned this the hard way when I first started—blasted through 5,000 requests in an hour and got my entire IP range blocked for 24 hours. Slow and steady absolutely wins this race.

How Much Does Google Maps Scraping Cost?

The pricing landscape is all over the place, so let me give you realistic expectations.

Free Options:

Several tools offer limited free tiers—Outscraper gives you a handful of credits monthly, G Maps Extractor has a basic free version, and SerpAPI includes free searches. These are great for testing or very small projects (under 100 records).

Budget Range ($20-$100/month):

Most no-code tools fall here. Octoparse starts around $75/month, Map Lead Scraper runs $30-40/month, and Scrap.io offers competitive pricing with transparent limits. This tier suits freelancers and small agencies doing regular lead generation.

Mid-Range ($100-$500/month):

Professional tools like Outscraper's premium plans, ProWebScraper subscriptions, and entry-level API services. You get higher limits, better support, and more reliable infrastructure. This is where serious marketers live.

Enterprise ($500+/month):

Bright Data, Oxylabs, and Zyte API operate in this space. You're paying for guaranteed uptime, dedicated support, unlimited scaling, and advanced features like auto-CAPTCHA solving and premium proxy pools. Large agencies and corporations justify these costs easily.

API-Based Pricing:

Pay-per-request models vary wildly. Scrapingdog charges $0.00033 per request (extremely competitive), while others charge $0.001-$0.01 depending on data depth. For 10,000 records, you're looking at anywhere from $3 to $100.
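The arithmetic behind that range, assuming one request per record (if each record needs several requests, for example details plus reviews, multiply accordingly):

```python
def cost_for(records: int, price_per_request: float) -> float:
    """Total cost assuming exactly one request per record."""
    return records * price_per_request


for price in (0.00033, 0.001, 0.01):
    print(f"${price}/request -> ${cost_for(10_000, price):,.2f}")
# $0.00033/request -> $3.30
# $0.001/request -> $10.00
# $0.01/request -> $100.00
```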

DIY Python Route:

Technically free if you write your own script, but factor in development time, proxy costs ($50-200/month for quality residential proxies), and potential CAPTCHA solving fees ($1-3 per thousand solves).

My recommendation: Start with Leads Sniper to test your concept and validate the data quality. Once you know it works, upgrade to a paid plan that matches your volume needs. Don't over-buy capacity you won't use.

Can I Export Scraped Google Maps Data to CSV or Excel?

Yes, and this is honestly one of the best parts—most modern scrapers make exporting trivially easy.

Standard export formats:

- CSV (Comma-Separated Values) – Universal compatibility, opens in Excel, Google Sheets, any CRM

- Excel (.xlsx) – Formatted spreadsheets with multiple sheets if needed

- JSON – For developers integrating with apps or databases

- XML – Less common but supported by enterprise tools

What good exports include:

Proper headers for each column, consistent data formatting (phone numbers, addresses), UTF-8 encoding for international characters, and no weird artifacts or broken fields.
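If you're exporting from your own script, Python's built-in `csv` module covers headers and empty cells for missing fields. A sketch with a hypothetical column list: when writing to disk, open the file with `newline=""` (so the csv module controls line endings) and `encoding="utf-8"`; if Excel garbles accented characters, `encoding="utf-8-sig"` adds a byte-order mark that helps it detect UTF-8.

```python
import csv
import io

FIELDS = ["name", "phone", "address", "rating"]  # hypothetical column order


def to_csv(rows, fields=FIELDS) -> str:
    """Serialize dict rows to CSV text with a header row; missing keys become empty cells."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=fields, extrasaction="ignore")
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()


print(to_csv([{"name": "Café Zoë", "phone": "555-0100"}]))
```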

Tools like Leads Sniper, Octoparse, and ProWebScraper nail this. Click "Export," choose CSV, and download. Two minutes later, you're importing into your CRM or analyzing in Excel.

Integration possibilities:

Many platforms offer direct integrations. Zapier connections push data automatically to Google Sheets, Airtable, HubSpot, or Salesforce. API-based tools can pipe data directly into your database without manual exports.

I regularly export to CSV, clean the data in Google Sheets (remove duplicates, standardize formatting), then import to my email outreach tool. The entire workflow from scrape to first email takes under an hour for hundreds of leads.
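The "remove duplicates" step is easy to script as well. A sketch that treats two rows as duplicates when their name and phone match after trimming and lowercasing; the choice of key fields is an assumption, and phone numbers should be normalized to one format first or the same number written two ways won't match.

```python
def dedupe(rows, key_fields=("name", "phone")):
    """Drop rows whose normalized key fields match an earlier row; keeps the first occurrence."""
    seen = set()
    unique = []
    for row in rows:
        key = tuple(str(row.get(f) or "").strip().lower() for f in key_fields)
        if key in seen:
            continue
        seen.add(key)
        unique.append(row)
    return unique


rows = [
    {"name": "Joe's Pizza", "phone": "+15551234567"},
    {"name": "joe's pizza ", "phone": "+15551234567"},   # same business, messy name
    {"name": "Joe's Pizza", "phone": "+15559876543"},    # different location
]
print(len(dedupe(rows)))  # 2
```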

How to Scrape Google Maps Reviews at Scale

Reviews are gold mines of customer insight—sentiment analysis, competitive intelligence, service quality indicators. Scraping them at scale requires specific approaches.

The challenge: Google loads reviews dynamically and limits initial display. You need to trigger "scroll to load more," handle expandable review text, and capture metadata like reviewer info and response dates.

Recommended tools:

Outscraper's Review Scraper handles the heavy lifting automatically. Specify a place or search query, set your review limit (10, 100, 1000+), and let it run. It captures full review text, ratings, dates, reviewer names, and business responses.

Apify's Google Maps Scraper excels at review extraction with customizable depth. Their actor-based system lets you configure pagination, filtering, and export formats.

Leads Sniper gives you complete control over the tool, and its lifetime subscription includes unlimited leads with reviews.

Best practices:

Start with a smaller review count to test your setup. Scraping 10,000 reviews from one listing takes time and can trigger blocks if you're too aggressive.

Export reviews with timestamps—this lets you track trends and identify recent changes in customer sentiment.
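With timestamps in hand, a monthly average rating is a simple way to spot trends. A sketch, assuming reviews are already converted to `(date, rating)` pairs; Google Maps often shows relative dates such as "3 weeks ago", so that conversion is a separate, approximate step. The sample data is made up.

```python
from collections import defaultdict
from datetime import date


def monthly_average(reviews):
    """Group (date, rating) pairs by year-month and return the average rating per month."""
    buckets = defaultdict(list)
    for day, rating in reviews:
        buckets[day.strftime("%Y-%m")].append(rating)
    return {month: sum(r) / len(r) for month, r in sorted(buckets.items())}


sample = [(date(2025, 1, 5), 5), (date(2025, 1, 20), 3), (date(2025, 2, 2), 2)]
print(monthly_average(sample))  # {'2025-01': 4.0, '2025-02': 2.0}
```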

Use sentiment analysis tools after scraping. Services like MonkeyLearn, or Python libraries such as NLTK (with its VADER analyzer) and TextBlob, can categorize reviews as positive, neutral, or negative, giving you actionable insights without reading thousands of comments.
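To show the idea behind lexicon-based sentiment, here's a deliberately tiny version: count positive and negative words and label by the difference. The word lists are toy assumptions for illustration; real tools use far larger lexicons or trained models.

```python
import re

# Toy word lists for illustration only.
POSITIVE = {"great", "friendly", "delicious", "clean", "fast", "love"}
NEGATIVE = {"rude", "slow", "dirty", "cold", "overpriced", "terrible"}


def label(review: str) -> str:
    """Label a review positive, negative, or neutral by counting lexicon words."""
    words = re.findall(r"[a-z']+", review.lower())
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(label("Great food, friendly staff"))  # positive
print(label("Rude waiter and cold pizza"))  # negative
```

A word counter like this misses negation ("not great") and sarcasm, which is exactly why dedicated libraries are worth using at scale.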

I once helped a restaurant group analyze competitor reviews in their market. We scraped 50,000+ reviews, ran sentiment analysis, and identified the top 10 complaint categories across competitors. They addressed those issues proactively in their own restaurants and saw satisfaction scores jump 18% in three months.

What Proxies Are Needed for Google Maps Scraping?

Proxies are your invisibility cloak. Without them, you're scraping with your real IP address—which Google will block faster than you can say "403 Forbidden."

Types of proxies:

Residential Proxies – These route through real residential internet connections. They're the most legitimate-looking and hardest for Google to detect. Premium services like Bright Data, Oxylabs, and Smartproxy offer millions of residential IPs across hundreds of countries.

Datacenter Proxies – Cheaper and faster but easier to detect. They come from data centers rather than homes. Useful for lower-scale scraping but not ideal for Google Maps.

Mobile Proxies – Route through mobile carrier networks (4G/5G). Extremely hard to block because they use the same infrastructure as legitimate mobile users. More expensive but worth it for critical projects.

Rotating Proxies – Automatically switch IP addresses after each request or time interval. This prevents any single IP from making too many requests.

Geographic targeting:

If you're scraping US-based businesses, use US proxies. Scraping Australian listings? Use Australian IPs. Location mismatches raise red flags.

Cost expectations:

Residential proxies run $5-15 per GB, which translates to thousands of requests depending on data volume. Mobile proxies are pricier at $80-300 per month for dedicated access. Datacenter proxies are cheapest at $1-5 per proxy but higher risk.

My setup: I use residential proxies from Bright Data with automatic rotation every 10 requests. The cost averages $200/month for medium-scale scraping (20,000-30,000 records monthly), but my success rate stays above 95%, so it's worth it.

Free proxy warning: Don't use free public proxies for anything important. They're slow, unreliable, often compromised, and will get you blocked immediately. Trust me—I tried this early on and wasted three days troubleshooting before realizing the proxies were the problem.

Top Product Recommendations for 2026

Let me give you my honest, tested recommendations across different use cases.

For Enterprise Scale and Reliability:

Bright Data Google Maps Scraper – The premium option that justifies its price. Built-in proxy rotation, CAPTCHA solving that actually works, and infrastructure that handles thousands of concurrent requests. If you're generating leads for a sales team or running large-scale competitive analysis, this eliminates headaches.

Oxylabs Google Maps Scraper – Strong API documentation makes integration smooth. Their JavaScript rendering handles modern web apps perfectly, and customer support actually responds within hours, not days.

For No-Code Users and Small Teams:

Outscraper – My personal favorite for versatility. The free tier lets you test thoroughly, and the paid plans scale reasonably. Email and phone extraction work reliably, which is rare.

Octoparse – The visual interface makes sense immediately. The template library saves hours of configuration. Cloud scraping means you can run jobs overnight without leaving your computer on.

Leads Sniper – A suite of scraping tools for Google Maps, Google Search, Yellow Pages, and domains, built for collecting leads and contact details such as email addresses.

For Developers and Technical Users:

Scrapingdog – Speed demon with excellent economics. The 100% success rate isn't marketing fluff—I've verified it across multiple projects. Great API documentation.

SerpAPI Google Maps – Clean, well-structured JSON output makes parsing trivial. The free tier is generous enough for development and testing. Reliable for app integrations.

Apify Google Maps Scraper – Actor-based customization gives you power without building from scratch. Handles anti-bot measures gracefully.

For Budget-Conscious Users:

G Maps Extractor – Free version actually works (rare in this space). Paid upgrade is affordable and unlocks bulk features.

Map Lead Scraper – Chrome extension simplicity meets practical functionality. Perfect for occasional use without subscription commitment.

Specialized Tools:

Lobstr.io – When you need marketing intelligence beyond basic contact info. Ad pixel detection is unique and valuable.

Scrap.io – 17 filtering options let you get surgical with targeting. Transparent pricing with no hidden costs.

Choose based on your primary need: scale, ease of use, cost, or specific data fields. There's genuinely no "best" tool—only the best tool for your situation.

Advanced Tips and Strategies

Here's some wisdom earned through trial, error, and occasional frustration.

Keyword strategy matters: Don't just search "restaurants." Get specific: "Italian restaurants," "pizza delivery," "fine dining steakhouse." Specific searches yield better-qualified leads and less noise.
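Specific keywords multiply quickly across locations, so it's worth generating the query list rather than typing it. A sketch with example keywords and cities:

```python
from itertools import product

keywords = ["Italian restaurant", "pizza delivery", "fine dining steakhouse"]
locations = ["Chicago, IL", "Evanston, IL"]

# Every keyword paired with every location.
queries = [f"{kw} in {loc}" for kw, loc in product(keywords, locations)]
print(len(queries))  # 6
print(queries[0])    # Italian restaurant in Chicago, IL
```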

Layer your data: Combine Google Maps data with other sources. Cross-reference with LinkedIn for decision-makers, match with email verification services for accuracy, append with business registry data for incorporation details.

Clean your data immediately: Duplicate listings happen. Phone numbers get formatted inconsistently. Addresses have typos. Build a cleaning process into your workflow—tools like OpenRefine or Python's pandas library make this manageable.

Focus on recency: Prioritize recently updated listings and fresh reviews. A business with reviews from last month is more likely to still be operating than one whose last review is from 2019.
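A recency filter is a date comparison. A sketch, assuming each listing dict carries a `last_review` date (or `None` when there are no reviews); the 90-day cutoff and sample data are arbitrary examples.

```python
from datetime import date, timedelta


def recently_active(listings, today, max_age_days=90):
    """Keep listings whose newest review is at most `max_age_days` old."""
    cutoff = today - timedelta(days=max_age_days)
    return [
        x for x in listings
        if x.get("last_review") is not None and x["last_review"] >= cutoff
    ]


sample = [
    {"name": "Fresh Bakery", "last_review": date(2025, 5, 20)},
    {"name": "Dusty Diner", "last_review": date(2019, 8, 1)},
    {"name": "No Reviews Yet", "last_review": None},
]
print([x["name"] for x in recently_active(sample, today=date(2025, 6, 1))])
# ['Fresh Bakery']
```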

Respect the data: Just because you can scrape doesn't mean you should spam. Use the data for legitimate outreach, provide genuine value, and respect opt-outs. Good karma aside, this keeps deliverability high and your reputation intact.

Test before scaling: Run a small batch (50-100 records), verify accuracy manually, check for missing fields, then scale up. Finding problems at 10,000 records is exponentially more painful than finding them at 100.
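For the "check for missing fields" step, the fill rate per field tells you at a glance whether the scraper is actually capturing what you need. A sketch, treating `None` and empty strings as missing; the sample rows are made up.

```python
def fill_rates(rows, fields):
    """Share of rows (0.0 to 1.0) with a non-empty value for each field."""
    total = len(rows)
    return {
        f: (sum(1 for r in rows if r.get(f) not in (None, "")) / total if total else 0.0)
        for f in fields
    }


sample = [
    {"name": "A", "phone": "555-0100", "website": ""},
    {"name": "B", "phone": None, "website": "https://b.example"},
]
print(fill_rates(sample, ["name", "phone", "website"]))
# {'name': 1.0, 'phone': 0.5, 'website': 0.5}
```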

Common Mistakes to Avoid

Let me save you some pain by highlighting what not to do.

Scraping too aggressively: The fastest way to get blocked. Patience isn't just a virtue; it's a necessity.

Ignoring legal considerations: At minimum, understand your local laws and use data ethically. Don't scrape first and think later.

Not validating data: Email addresses can be outdated, phone numbers disconnected. Validate before relying on the data for critical campaigns.

Overlooking formatting inconsistencies: One business lists phone as "(555) 123-4567," another as "555.123.4567." Standardize everything before import.
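For US numbers, stripping everything but digits is enough to make both of those formats match. A sketch that assumes US/NANP numbers only; for international data, the `phonenumbers` package (a Python port of Google's libphonenumber) is the safer choice.

```python
import re


def normalize_us_phone(raw: str):
    """Return a US number as '+1XXXXXXXXXX', or None if it isn't 10 digits (plus optional leading 1)."""
    digits = re.sub(r"\D", "", raw)
    if len(digits) == 11 and digits.startswith("1"):
        digits = digits[1:]
    return f"+1{digits}" if len(digits) == 10 else None


print(normalize_us_phone("(555) 123-4567"))  # +15551234567
print(normalize_us_phone("555.123.4567"))    # +15551234567
```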

Scraping without purpose: "I'll scrape everything and figure out what to do with it later" wastes time and storage. Know what you need before you start.

Using sketchy tools: If a tool promises "unlimited scraping for $5," run away. Quality infrastructure costs money to maintain.

Conclusion: Your Next Steps

Google Maps scraping isn't just a technical skill—it's a competitive advantage. While your competitors are manually building prospect lists or buying stale data, you're extracting fresh, accurate, targeted information in minutes.

Start simple. Pick one no-code tool from my recommendations, run a test scrape in your industry, and see what you get. The learning curve is gentler than you think, and the payoff shows up immediately in your lead quality and campaign targeting.

The businesses crushing it in local marketing, lead generation, and competitive intelligence aren't smarter; they're just better informed. They have the data, they use it strategically, and they move faster than competitors stuck in manual mode.

Your action plan:

- Choose a tool based on your needs and budget (Leads Sniper for beginners, Scrapingdog for developers)

- Run a small test scrape (50-100 records in your target market)

- Validate the data quality against manual checks

- Build your scraping workflow with proper delays and proxies

- Export, clean, and integrate into your existing processes

- Scale gradually while monitoring success rates

The data is out there, publicly visible, waiting to fuel your next campaign, research project, or business strategy. The only question is: will you still be copying phone numbers one at a time, or will you automate and move on to work that actually requires human creativity?

Go scrape something. Your future self will thank you.