In today's data-driven world, understanding the local business landscape is crucial for numerous industries. Google Maps, with its vast repository of information on businesses, locations, and points of interest, stands as an indispensable resource. For businesses, marketers, researchers, and developers, the ability to effectively extract and utilize this data can unlock significant competitive advantages, drive strategic decisions, and foster innovation. This comprehensive guide will equip you with the knowledge and tools necessary to navigate the complexities of Google Maps data scraping in 2026, ensuring you can harness its power responsibly and effectively.

The global location-based services market is anticipated to reach USD 123.83 billion by 2032, with a CAGR of 20.00% during 2023–2032, indicating a growing dependence on geographic data [SNS Insider, 2025]. This explosive growth underscores the critical importance of location-based data for businesses aiming to connect with their target audiences and optimize their operations.

With over 2 billion people using Google Maps monthly worldwide [Wiser Review, 2025], it is a prime source for this invaluable data. This article will delve into the core aspects of Google Maps data scraping, from understanding the available data and its value to the methods of extraction, crucial legal considerations, and overcoming common technical challenges.

What is Google Maps Data Scraping?

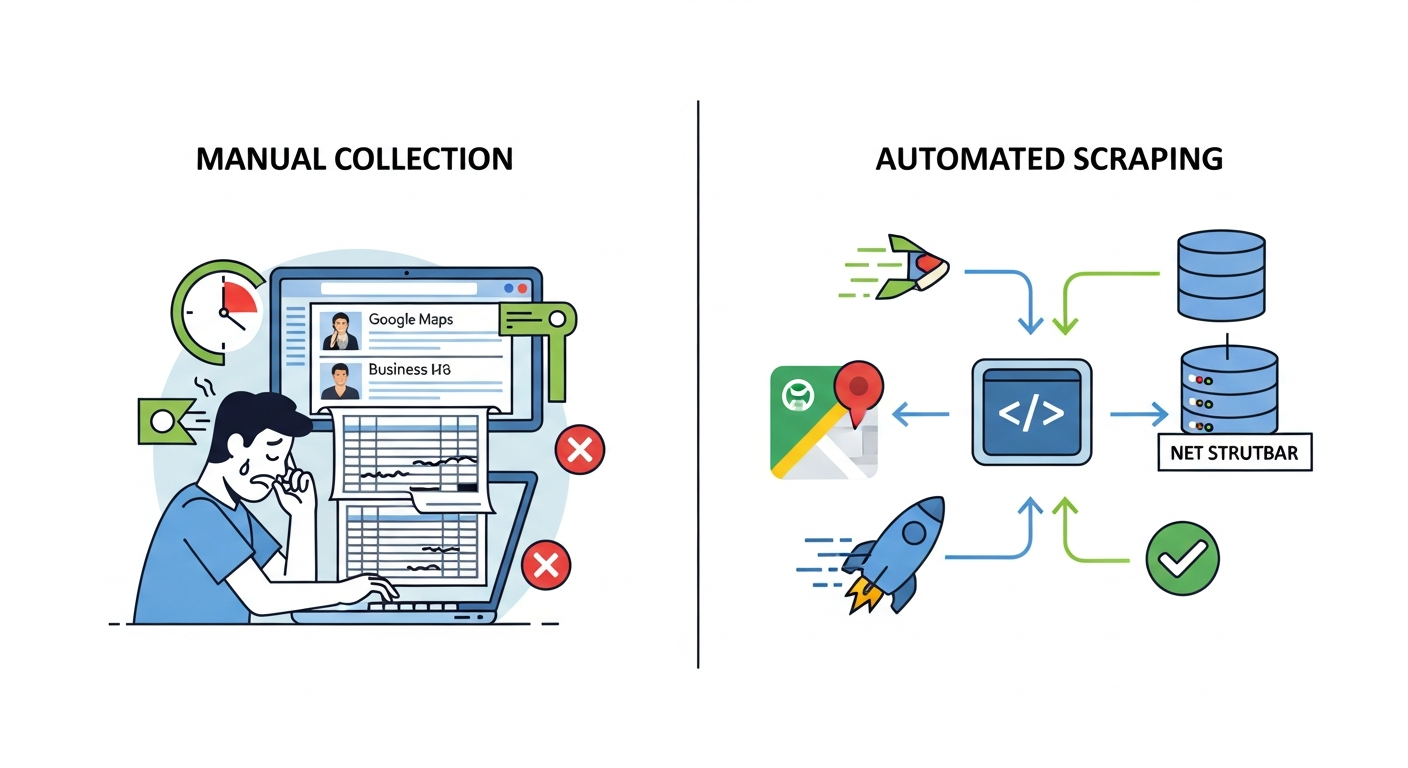

Google Maps data scraping, in essence, is the automated process of extracting information from Google Maps. This involves using software or scripts to systematically browse Google Maps listings and collect specific details about businesses, such as their names, addresses, phone numbers, websites, customer reviews, ratings, operating hours, and geographical coordinates.

Instead of manually copying and pasting information, which is time-consuming and prone to errors, data scraping automates this process, allowing for the collection of large volumes of data efficiently. Over 65% of global enterprises had adopted data extraction tools, including web scraping software, by the end of 2026 to support real-time analytics [Leads-Sniper, 2026], highlighting the widespread adoption of such methods for business intelligence.

Why Scrape Data from Google Maps in 2026?

The reasons for scraping Google Maps data are as diverse as the businesses that operate today. For marketers, it's a goldmine for lead generation, enabling them to identify potential clients based on location, industry, and customer sentiment. Researchers can utilize this data for urban planning, demographic analysis, or tracking the growth and distribution of specific business types. Developers might integrate this information into applications for navigation, local search, or business discovery tools.

Understanding the local market is crucial: nearly half of all Google searches have local intent, with about 72% of these searchers visiting a store within five miles of their location [Outscraper, 2026]. This statistic alone demonstrates the direct link between online local search data and real-world customer behavior, making Google Maps data an indispensable asset for driving foot traffic and sales. Furthermore, enterprises adopted location analytics between 2020 and 2026 to guide expansion strategies, optimize distribution networks, and prioritize high-growth zones [Real Data API, 2025].

What This Guide Will Cover

This guide is designed to be your definitive resource for navigating Google Maps data scraping. We will start by dissecting the types of data you can extract and understanding its inherent value. Crucially, we will address the vital legal and ethical considerations to ensure responsible data collection practices.

You will then explore various methods for scraping, ranging from user-friendly tools to advanced custom programming. We will also tackle common challenges faced during the scraping process, such as IP blocking and CAPTCHAs, and provide actionable solutions. Finally, we will cover the essential steps for processing, cleaning, and storing your collected data, and close with advanced applications such as market research, lead generation, and sentiment analysis.

Understanding the Data: What You Can Scrape from Google Maps

Google Maps is a treasure trove of publicly available business information. By effectively scraping this platform, you can gather a wide array of data points essential for comprehensive analysis and strategic planning. The richness of this data transforms raw information into actionable insights, allowing businesses to understand their competitive landscape, identify opportunities, and connect with customers more effectively.

Key Data Points Available

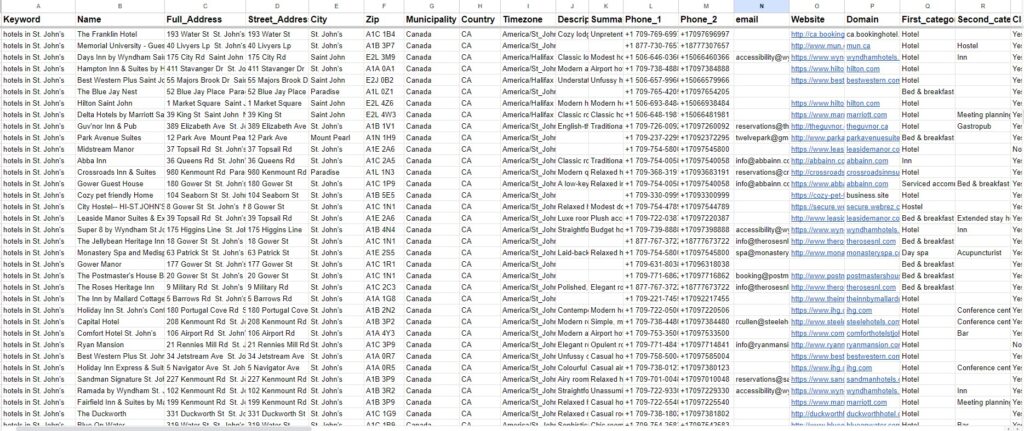

The data available through Google Maps scraping is extensive and can provide a detailed profile of any given business. Here are some of the most critical data points you can extract:

- Business Name: The official name of the establishment.

- Full Address: Including street, city, state, zip code, and country.

- Contact Information: Phone numbers and website URLs.

- Geographical Coordinates: Latitude and longitude, crucial for mapping and geospatial analysis.

- Ratings and Reviews: Customer ratings (e.g., out of 5 stars) and detailed text reviews, offering insights into customer satisfaction and sentiment.

- Operating Hours: Daily opening and closing times, including special hours for holidays.

- Business Category/Type: Classification of the business (e.g., restaurant, doctor's office, retail store).

- Business Description: A summary provided by the business owner or extracted from their website.

- Photos: Images associated with the business listing.

- Pricing Information: Where applicable (e.g., for restaurants or services).
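To keep these fields consistent across a project, it can help to define one record type up front. The sketch below is a minimal Python dataclass; the class name `BusinessListing` and all of its field names are our own illustrative choices, not Google's schema.

```python
from dataclasses import asdict, dataclass, field
from typing import Optional


@dataclass
class BusinessListing:
    """One Google Maps listing. Field names are illustrative, not Google's own schema."""
    name: str
    address: str
    phone: Optional[str] = None
    website: Optional[str] = None
    latitude: Optional[float] = None
    longitude: Optional[float] = None
    rating: Optional[float] = None        # average stars, e.g. 4.6 out of 5
    review_count: Optional[int] = None
    category: Optional[str] = None
    description: Optional[str] = None
    price_level: Optional[str] = None     # only present for some categories
    hours: dict = field(default_factory=dict)       # weekday -> "09:00-17:00"
    reviews: list = field(default_factory=list)     # review texts
    photo_urls: list = field(default_factory=list)

    def has_coordinates(self) -> bool:
        """True when the listing can be placed on a map."""
        return self.latitude is not None and self.longitude is not None


listing = BusinessListing(name="Example Cafe", address="1 Main St, Springfield, IL 62701, USA",
                          latitude=39.80, longitude=-89.64, rating=4.5, category="cafe")
row = asdict(listing)  # plain dict, ready for CSV or JSON export
```

Using `default_factory` for the dict and list fields gives each listing its own empty container instead of one shared mutable default.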

The Value of this Data: From Raw Information to Actionable Insights

The true power of scraping Google Maps lies in transforming this raw data into actionable business intelligence. For instance, collecting addresses and coordinates allows for precise location targeting in marketing campaigns or for optimizing delivery routes. Analyzing reviews and ratings can reveal customer pain points and strengths, informing product development or customer service improvements. Identifying businesses within specific categories and geographic areas can fuel market research, helping to uncover underserved markets or competitive pressures.

Because so many local searches end in a nearby store visit, precise local data is directly tied to customer acquisition. By aggregating and analyzing this data, businesses can gain a comprehensive understanding of their market, customer preferences, and competitive landscape, leading to more informed strategic decisions and, ultimately, business growth.

Legal and Ethical Considerations for Google Maps Scraping

Navigating the landscape of data scraping requires a thorough understanding of the legal and ethical boundaries. While Google Maps offers a wealth of public data, it's imperative to approach extraction with respect for Google's terms of service, data privacy regulations, and ethical best practices to avoid legal repercussions and stay in good standing.

Understanding Google Maps Terms of Use

Google's Terms of Service govern how its services, including Google Maps, can be used, and they restrict specific activities. Google's general Terms of Service, for example, prohibit using automated means to access content in violation of machine-readable instructions such as robots.txt files, and the Google Maps Platform Terms of Service bar customers from scraping or exporting Google Maps content for use outside Google's services. Using Google's services in a way that infringes intellectual property rights or violates applicable laws is also prohibited.

Unauthorized scraping can lead to IP blocks, service suspensions, or even legal action. It's essential to review the latest Google Maps Platform ToS to ensure your data collection methods align with their guidelines. Over 73% of websites and businesses in the United States use the Google Maps API [Center AI, 2024], which underscores both how central the platform is and how tightly Google controls access to it.

Data Privacy and GDPR Compliance

When scraping data that might include personal information, even if publicly available, it is crucial to consider data privacy regulations like the General Data Protection Regulation (GDPR) in Europe or the California Consumer Privacy Act (CCPA) in the United States.

These regulations impose strict rules on how personal data can be collected, processed, and stored. If your scraping activities could inadvertently capture personally identifiable information (PII), such as reviewer names, you must ensure full compliance, which may involve establishing a lawful basis for processing (such as consent or legitimate interests), honoring opt-out and deletion requests, and adhering to data minimization principles.
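One practical way to apply data minimization is to drop every field you do not need and replace reviewer identities with keyed hashes before storage. The sketch below assumes listings and reviews arrive as Python dicts with hypothetical keys such as `author`, and that the secret key is stored separately from the data. Keyed hashing is pseudonymization, which the GDPR still treats as personal data, so removing the identifier entirely is the safer choice when you do not need it; review text itself can also contain personal details.

```python
import hashlib
import hmac

# Fields assumed to be needed for business-level analysis; everything else is dropped.
BUSINESS_FIELDS = {"name", "address", "phone", "website", "rating", "category"}


def minimize_listing(raw: dict) -> dict:
    """Keep only business-level fields (data minimization)."""
    return {k: v for k, v in raw.items() if k in BUSINESS_FIELDS}


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed SHA-256 hash (HMAC), truncated for readability."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def minimize_review(review: dict, secret_key: bytes) -> dict:
    """Keep rating and text; swap the reviewer's name for a pseudonymous ID."""
    author = review.get("author")
    return {
        # Lets you count repeat reviewers without storing their names.
        "author_id": pseudonymize(author, secret_key) if author else None,
        "rating": review.get("rating"),
        "text": review.get("text", ""),
    }
```

The same key always maps the same name to the same ID, which preserves repeat-reviewer analysis; rotating or destroying the key breaks that linkage.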

Public vs. Private Data: What's Fair Game?

The distinction between public and private data is critical. Data that is intentionally made public by businesses or Google (e.g., business names, addresses, public reviews) is generally considered fair game for scraping.

However, data that requires user authentication to access, or personal information that has not been explicitly shared publicly by the individual or business, should not be scraped. Respecting the intent behind data sharing—whether it's to inform the public or for personal use—is key to ethical scraping.

Best Practices for Responsible Scraping

To ensure your Google Maps data collection is both effective and compliant, adhere to the following best practices:

- Scrape Publicly Available Data Only: Do not attempt to access data behind login screens or that is not intended for broad dissemination.

- Respect Rate Limits: Avoid overwhelming Google's servers with excessive requests. Implement delays between requests and stagger your scraping activities.

- Use Proxies Wisely: Employ a rotation of IP addresses using proxies to avoid IP bans and distribute requests.

- Handle Data Responsibly: If you collect any data that could be considered personal, anonymize it or ensure it is handled in compliance with privacy laws.

- Review Google's Terms of Service Regularly: Google's policies can change, so staying informed is crucial.

- Consider the Google Maps Platform APIs: For many use cases, especially commercial applications requiring reliable and compliant data access, using the official Google Maps Platform APIs is the recommended and most robust approach.
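The "Respect Rate Limits" practice can be sketched as a small throttle that enforces a minimum gap between requests and adds random jitter to stagger them. The default of one request every 20 to 30 seconds is an illustrative assumption consistent with this guide's "few requests per minute" advice, not a limit published by Google; the class name `PoliteThrottle` is hypothetical.

```python
import random
import time


class PoliteThrottle:
    """Enforce a minimum gap between requests plus random jitter.

    The defaults (20 s minimum, up to 10 s extra) are illustrative assumptions,
    not limits published by Google.
    """

    def __init__(self, min_interval: float = 20.0, jitter: float = 10.0,
                 clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self.jitter = jitter
        self._clock = clock      # injectable so the logic can be tested
        self._sleep = sleep
        self._last = None        # time the previous request was released

    def wait(self) -> float:
        """Block until the next request may be sent; return the seconds slept."""
        slept = 0.0
        if self._last is not None:
            target = self._last + self.min_interval + random.uniform(0, self.jitter)
            slept = max(0.0, target - self._clock())
            if slept > 0:
                self._sleep(slept)
        self._last = self._clock()
        return slept
```

Call `throttle.wait()` immediately before each request. Using a monotonic clock means the spacing is unaffected if the system clock is adjusted mid-run.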

Methods for Scraping Google Maps Data

There are several approaches to scraping data from Google Maps, each with its own advantages and disadvantages. The best method for you will depend on your technical expertise, budget, the scale of your data needs, and your comfort level with potential compliance risks.

Method 1: Using No-Code/Low-Code Google Maps Scraper Tools

For users who are not developers or prefer a simpler, more accessible solution, various no-code or low-code tools offer specialized Google Maps scraping capabilities. These platforms often provide a visual interface where you can define the data you want to extract, specify search queries (e.g., "restaurants in New York City"), and the tool handles the underlying scraping process.

Pros:

- Ease of Use: Requires minimal to no coding knowledge.

- Speed: Quick setup for basic scraping tasks.

- User-Friendly Interface: Intuitive design for defining data extraction rules.

- Pre-built Templates: Many tools offer templates for scraping Google Maps specifically.

Cons:

- Limited Flexibility: May not offer the customization needed for complex scraping scenarios.

- Cost: Often subscription-based, which can become expensive for large-scale projects.

- Potential for Detection: Some tools might use less sophisticated methods that can be more easily detected by Google's anti-scraping measures.

Popular examples of such tools include Leads Sniper, Apify, and Bright Data's Web Scraper IDE, which offer features designed to tackle Google Maps data extraction. These tools often support exporting data in formats like CSV or Excel, making it easy to integrate into existing workflows.

Method 2: Leveraging the Google Maps Platform APIs (Official Approach)

Google provides official APIs as part of the Google Maps Platform, which are designed for developers to integrate Google Maps data and services into their applications. This is the most legitimate and supported way to access Google Maps data. Key APIs include the Places API, which allows you to query information about places, including business details, reviews, and ratings.

Pros:

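- Legitimate and Supported: Compliant with Google's terms of service, provided you also follow the Maps Platform usage rules, such as its restrictions on caching and storing content.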

- Reliable and Stable: Designed for consistent data delivery.

- Rich Data Features: Access to a wide range of data points and functionalities.

- Scalable: Can handle large-scale data requests effectively.

Cons:

- Cost: The Google Maps Platform operates on a pay-as-you-go model. While there is a generous free tier, high-volume usage can become expensive.

- Requires Development Skills: Implementation requires programming knowledge to interact with the APIs.

- Data Structure Limitations: You are limited to the data points and search capabilities provided by the APIs; you cannot scrape arbitrary elements from the Google Maps interface.

Using the APIs involves obtaining API keys, making requests to specific endpoints, and parsing the returned JSON data. This method is ideal for businesses requiring a continuous, reliable, and compliant data stream.
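As a rough illustration of that flow, the sketch below calls the Text Search endpoint of the Places API (New) using only Python's standard library. It assumes you have an API key with the Places API (New) enabled. The `X-Goog-FieldMask` header selects which fields come back, and Google bills requests according to the fields you ask for, so request only what you need. The output keys in `parse_places` (`place_id`, `lat`, and so on) are our own naming.

```python
import json
import urllib.request

PLACES_TEXT_SEARCH_URL = "https://places.googleapis.com/v1/places:searchText"

# Only the fields listed here are returned (and billed).
FIELD_MASK = ",".join([
    "places.id",
    "places.displayName",
    "places.formattedAddress",
    "places.location",
    "places.rating",
    "places.userRatingCount",
    "places.nationalPhoneNumber",
    "places.websiteUri",
])


def build_text_search_request(query: str, api_key: str) -> urllib.request.Request:
    """Build the POST request for a free-text place search."""
    body = json.dumps({"textQuery": query}).encode("utf-8")
    return urllib.request.Request(
        PLACES_TEXT_SEARCH_URL,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "X-Goog-Api-Key": api_key,
            "X-Goog-FieldMask": FIELD_MASK,
        },
    )


def parse_places(payload: dict) -> list:
    """Flatten the JSON response into simple row dicts; absent fields become None."""
    rows = []
    for place in payload.get("places", []):
        loc = place.get("location", {})
        rows.append({
            "place_id": place.get("id"),
            "name": place.get("displayName", {}).get("text"),
            "address": place.get("formattedAddress"),
            "lat": loc.get("latitude"),
            "lng": loc.get("longitude"),
            "rating": place.get("rating"),
            "review_count": place.get("userRatingCount"),
            "phone": place.get("nationalPhoneNumber"),
            "website": place.get("websiteUri"),
        })
    return rows


def text_search(query: str, api_key: str) -> list:
    """Send the request and return parsed rows (requires network access)."""
    req = build_text_search_request(query, api_key)
    with urllib.request.urlopen(req, timeout=30) as resp:
        return parse_places(json.load(resp))
```

For example, `text_search("restaurants in New York City", api_key="YOUR_API_KEY")` returns a list of row dicts. Keeping request building and parsing separate from the network call makes both easy to test offline.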

Method 3: Building a Custom Scraper with Python (Advanced & Flexible)

For maximum control and flexibility, building a custom scraper using a programming language like Python is the most powerful option. Python boasts a rich ecosystem of libraries that facilitate web scraping, such as Requests for making HTTP requests, Beautiful Soup for parsing HTML, and Playwright or Selenium for interacting with dynamic, JavaScript-rendered content.

Pros:

- Ultimate Flexibility: Complete control over what data to scrape, how to scrape it, and how to process it.

- Cost-Effective at Scale: Once developed, running custom scripts can be cheaper than API usage for very large datasets.

- Customizable Logic: Can implement advanced logic for handling anti-scraping measures, data cleaning, and more.

- Integration Capabilities: Easily integrates with other Python libraries for data analysis, machine learning, and database storage.

Cons:

- Requires Advanced Programming Skills: Significant expertise in Python and web scraping techniques is necessary.

- Time-Consuming Development: Building and maintaining a robust scraper takes considerable effort.

- Maintenance Overhead: Google frequently updates its website structure, requiring ongoing scraper maintenance.

- Legal and Ethical Risks: If not handled carefully, custom scrapers can violate Google's ToS and lead to IP bans or legal issues.

When building a Python scraper for Google Maps, you'll likely need to use tools that can handle JavaScript rendering, as much of the content is loaded dynamically. Libraries like Playwright are particularly effective for this, allowing you to automate a real browser instance.

Overcoming Common Google Maps Scraping Challenges

Scraping Google Maps is not without its hurdles. Google employs sophisticated measures to prevent automated scraping, and navigating these can be complex. Understanding these challenges and implementing appropriate solutions is key to successful and sustainable data collection.

IP Blocking and Rate Limiting

One of the most common obstacles is IP blocking and rate limiting. When Google detects a high volume of requests from a single IP address, it may temporarily or permanently block that address. This is a primary reason why implementing robust proxy strategies is crucial for any serious scraping operation.

- Solution: Proxies: Utilize a diverse pool of residential or rotating proxies. Residential proxies are IP addresses assigned by Internet Service Providers to homeowners and are generally trusted by websites. Rotating proxies change your IP address with each request or after a set interval, making it much harder for Google to identify and block a single source.

- Solution: Throttling: Introduce deliberate delays between your requests. Instead of sending hundreds of requests per second, slow down to a few requests per minute, mimicking human browsing behavior.

- Solution: User-Agent Rotation: Regularly change the User-Agent string in your requests to mimic different browsers and devices.
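When a server explicitly signals that you are sending too many requests (typically HTTP 429 Too Many Requests, sometimes with a `Retry-After` header), the respectful response is to slow down rather than push harder. The sketch below uses a standard technique not named above, exponential backoff with "full jitter", and honors `Retry-After` when it is given in seconds. The retryable status codes, base delay, and cap are assumptions to tune; the `opener` and `sleep` parameters exist only so the logic can be tested without network access.

```python
import random
import time
import urllib.error
import urllib.request

# Status codes treated as "slow down / try later" (an assumption; adjust as needed).
RETRYABLE_STATUS = {429, 500, 502, 503, 504}


def backoff_delay(attempt: int, base: float = 2.0, cap: float = 300.0) -> float:
    """Full-jitter backoff: a random delay between 0 and min(cap, base * 2**attempt) seconds."""
    return random.uniform(0, min(cap, base * 2 ** attempt))


def fetch_with_backoff(req, max_attempts: int = 5, sleep=time.sleep,
                       opener=urllib.request.urlopen) -> bytes:
    """Fetch req, waiting progressively longer after each retryable error."""
    for attempt in range(max_attempts):
        try:
            with opener(req, timeout=30) as resp:
                return resp.read()
        except urllib.error.HTTPError as err:
            if err.code not in RETRYABLE_STATUS or attempt == max_attempts - 1:
                raise  # not a rate-limit signal, or out of attempts
            retry_after = err.headers.get("Retry-After") if err.headers else None
            # Retry-After may also be an HTTP date; only the seconds form is handled here.
            if retry_after and retry_after.strip().isdigit():
                delay = float(retry_after)
            else:
                delay = backoff_delay(attempt)
            sleep(delay)
    raise RuntimeError("max_attempts must be at least 1")
```

Randomizing the whole delay, rather than adding a little noise to a fixed schedule, spreads retries out so that many clients backing off at once do not all return at the same moment.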

Handling CAPTCHAs and Anti-Scraping Measures

Google often deploys CAPTCHAs (Completely Automated Public Turing test to tell Computers and Humans Apart) to verify that users are not bots. These can halt your scraping process instantly.

- Solution: CAPTCHA Solving Services: Integrate with third-party CAPTCHA solving services (e.g., 2Captcha, Anti-Captcha). These services use human workers or advanced AI to solve CAPTCHAs, returning a token that your scraper can use to bypass the challenge.

- Solution: Browser Automation Tools: Tools like Playwright or Selenium, when configured correctly and used with proxies and user-agent rotation, can sometimes bypass simpler anti-bot measures by simulating human interaction more effectively.

- Solution: Data Extraction APIs: For critical applications, consider using specialized data extraction services or APIs that already have built-in mechanisms for handling CAPTCHAs and other anti-scraping measures.

Dealing with Dynamic Content and JavaScript-Rendered Pages

Modern websites, including Google Maps, heavily rely on JavaScript to load content dynamically. Standard HTTP request libraries like Requests may not be sufficient as they don't execute JavaScript.

- Solution: Headless Browsers: Use tools that can control a real web browser in the background, executing JavaScript and rendering the full page before you extract data. Playwright and Selenium are excellent choices for this; they let you interact with web elements as a human would.

- Solution: Network Analysis: Sometimes, inspecting the network requests made by the browser using developer tools in Chrome or Firefox can reveal the underlying API calls Google Maps uses to fetch data. If these APIs are accessible and not overly protected, you might be able to query them directly, which is often more efficient than full-page rendering.

Website Structure Changes and Scraper Maintenance

Google frequently updates its website's HTML structure and underlying code. When this happens, your scraper, which is built to parse a specific structure, will break.

- Solution: Robust Parsing: Design your scraper to be resilient. Use robust selectors (e.g., IDs, carefully chosen CSS selectors) and implement error handling to gracefully manage unexpected changes. Avoid relying on fragile selectors that are likely to break.

- Solution: Monitoring and Alerting: Set up monitoring systems to detect when your scraper fails. Implement logging to record errors and the specific pages or requests that caused issues.

- Solution: Modular Design: Build your scraper in a modular way, separating different functionalities (e.g., fetching pages, parsing data, saving data). This makes it easier to update specific parts of the scraper without affecting the entire system. Regular testing and updates are a continuous requirement for custom scrapers.
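Here is a minimal sketch of the robust-parsing and monitoring ideas, written as the parsing layer of a modular pipeline: each output field lists several candidate locations in the raw record, tried in order, and records missing a required field are logged with context instead of crashing the run. It assumes the raw data has already been fetched and decoded into nested dicts and lists (for example, JSON captured from an API response); the layouts in `FIELD_PATHS` and the logger name are hypothetical.

```python
import logging
from typing import Any, Optional, Sequence

logger = logging.getLogger("maps_scraper.parse")

# Candidate locations for each output field, most preferred first.
# These layouts are hypothetical; update them when the source format changes.
FIELD_PATHS = {
    "name": [("displayName", "text"), ("name",), ("title",)],
    "address": [("formattedAddress",), ("address", "full")],
    "rating": [("rating",), ("reviews", "average")],
}
REQUIRED_FIELDS = ("name",)


def get_path(data: Any, path: Sequence) -> Any:
    """Follow keys/indexes through nested dicts and lists; None if any step is missing."""
    for key in path:
        try:
            data = data[key]
        except (KeyError, IndexError, TypeError):
            return None
    return data


def first_present(data: Any, paths: Sequence[Sequence]) -> Optional[Any]:
    """Return the first non-empty value among the candidate paths."""
    for path in paths:
        value = get_path(data, path)
        if value is not None and value != "":
            return value
    return None


def parse_record(raw: dict, source: str) -> Optional[dict]:
    """Parse one raw record; log and skip it if a required field is missing."""
    record = {name: first_present(raw, paths) for name, paths in FIELD_PATHS.items()}
    missing = [f for f in REQUIRED_FIELDS if record[f] is None]
    if missing:
        # Enough context to trace the page or request that broke the parser.
        logger.warning("parse failure: source=%s missing=%s keys=%s",
                       source, missing, sorted(map(str, raw)))
        return None
    return record
```

When the source layout changes, you add a new path to `FIELD_PATHS` rather than rewriting parsing code, and a spike in "parse failure" log lines is a simple signal to feed into alerting.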

Processing, Cleaning, and Storing Your Scraped Google Maps Data

Once you have successfully scraped data from Google Maps, the next crucial steps involve cleaning, processing, and storing it effectively. Raw scraped data is often messy and inconsistent, requiring significant attention to ensure its usability and accuracy for analysis.

Data Cleaning and Validation

The data collected from scraping is rarely perfect. It often contains duplicates, inconsistencies, missing values, and incorrect formatting.

- Deduplication: Identify and remove duplicate entries. This is especially important if your scraping process might revisit the same locations or if search results overlap.

- Standardization: Ensure data formats are consistent. For example, addresses should follow a uniform structure, phone numbers should be in a standardized format (e.g., with country codes), and ratings should be consistently represented.

- Handling Missing Data: Decide how to manage missing fields. You might choose to fill them with default values, mark them as unknown, or remove records with critical missing information.

- Text Cleaning: For reviews or business descriptions, remove HTML tags, special characters, and normalize text (e.g., converting to lowercase).

- Validation: Implement checks to ensure the data makes sense. For example, verify that coordinates fall within expected geographical boundaries or that phone numbers have a plausible format.
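The cleaning and validation steps above might look like this in plain Python. This is a sketch under simplifying assumptions: phone normalization handles only North American 10- or 11-digit numbers (for international numbers, the `phonenumbers` library is a more complete option), and duplicates are detected on a normalized name-plus-address key, which will miss listings whose addresses are written differently.

```python
import html
import re

TAG_RE = re.compile(r"<[^>]+>")
SPACE_RE = re.compile(r"\s+")


def clean_text(text) -> str:
    """Strip HTML tags, decode entities like &amp;, collapse whitespace, lowercase."""
    text = html.unescape(TAG_RE.sub(" ", text or ""))
    return SPACE_RE.sub(" ", text).strip().lower()


def normalize_us_phone(raw):
    """Return '+1XXXXXXXXXX' (E.164 style) for North American numbers, else None."""
    digits = re.sub(r"\D", "", raw or "")
    if len(digits) == 10:
        return "+1" + digits
    if len(digits) == 11 and digits.startswith("1"):
        return "+" + digits
    return None  # unknown format: flag for review rather than guess


def valid_coordinates(lat, lng, bbox=None) -> bool:
    """Check global lat/lng ranges and, optionally, a (min_lat, min_lng, max_lat, max_lng) box."""
    if lat is None or lng is None:
        return False
    if not (-90 <= lat <= 90 and -180 <= lng <= 180):
        return False
    if bbox is not None:
        min_lat, min_lng, max_lat, max_lng = bbox
        return min_lat <= lat <= max_lat and min_lng <= lng <= max_lng
    return True


def dedupe(records):
    """Keep the first record for each normalized (name, address) pair."""
    seen, unique = set(), []
    for rec in records:
        key = (clean_text(rec.get("name")), clean_text(rec.get("address")))
        if key not in seen:
            seen.add(key)
            unique.append(rec)
    return unique
```

Returning `None` for unrecognized phone formats, instead of guessing, keeps bad values visible so they can be handled under your missing-data policy.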

Data Storage Options

The choice of storage depends on the volume of data, your intended use, and your technical infrastructure.

- CSV (Comma Separated Values): A simple, widely compatible format suitable for smaller datasets. Easily opened in spreadsheet software like Excel.

- Excel: Ideal for smaller to medium-sized datasets, offering features for sorting, filtering, and basic analysis directly within the spreadsheet.

- JSON (JavaScript Object Notation): A flexible, human-readable format that is excellent for structured data and commonly used in web APIs. It's well-suited for storing complex nested data structures, like reviews with multiple comments.

- Databases: For larger datasets, consider using databases.

  - SQL Databases (e.g., PostgreSQL, MySQL): Excellent for structured data, offering powerful querying capabilities and strong data integrity.

  - NoSQL Databases (e.g., MongoDB): More flexible for unstructured or semi-structured data, and can scale horizontally more easily.
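A minimal sketch of three common targets, using only the standard library: CSV for spreadsheets, JSON for nested data, and SQLite standing in for a server database such as PostgreSQL or MySQL. The `places` table and its columns are a hypothetical schema; using the listing ID as the primary key means re-running the scraper updates rows instead of duplicating them.

```python
import csv
import json
import sqlite3

FIELDS = ["place_id", "name", "address", "lat", "lng", "rating"]


def save_csv(records, path):
    """Flat export; nested fields such as review lists are silently dropped."""
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=FIELDS, extrasaction="ignore")
        writer.writeheader()
        writer.writerows(records)


def save_json(records, path):
    """JSON keeps nested structures (e.g. a list of reviews) intact."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(records, f, ensure_ascii=False, indent=2)


def save_sqlite(records, db_path):
    """Upsert records into a 'places' table keyed by place_id."""
    conn = sqlite3.connect(db_path)
    try:
        with conn:  # commits on success, rolls back on error
            conn.execute("""
                CREATE TABLE IF NOT EXISTS places (
                    place_id TEXT PRIMARY KEY,
                    name TEXT NOT NULL,
                    address TEXT,
                    lat REAL, lng REAL, rating REAL)""")
            conn.executemany(
                "INSERT OR REPLACE INTO places VALUES "
                "(:place_id, :name, :address, :lat, :lng, :rating)",
                [{k: r.get(k) for k in FIELDS} for r in records])
    finally:
        conn.close()
```

Moving to PostgreSQL or MySQL mostly means swapping the driver and adjusting the upsert syntax; the table design carries over.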

Data Enrichment and Geocoding

After cleaning, you can enrich your data to add further value. Geocoding converts addresses into geographic coordinates (latitude and longitude), and reverse geocoding converts coordinates back into addresses. Scraped listings often include coordinates already, but geocoding can fill them in when they are missing.

- Geocoding: If your scraped data doesn't include coordinates, you can use services like the Google Geocoding API to add them. This is essential for any spatial analysis.

- External Data Integration: Combine your Google Maps data with other datasets, such as demographic information, property records, or industry-specific data, to create a more comprehensive profile for each location.
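The geocoding step can be sketched against the Google Geocoding API's JSON endpoint. This assumes an API key with the Geocoding API enabled and records stored as dicts with hypothetical `address`, `lat`, and `lng` keys. Only records missing coordinates are sent, which keeps billed requests down, and the HTTP call is passed in as `fetch_json` so the logic can be checked offline.

```python
import json
import urllib.parse
import urllib.request

GEOCODE_URL = "https://maps.googleapis.com/maps/api/geocode/json"


def geocode_url(address: str, api_key: str) -> str:
    """Build a forward-geocoding request URL (address -> coordinates)."""
    return GEOCODE_URL + "?" + urllib.parse.urlencode({"address": address, "key": api_key})


def parse_geocode(payload: dict):
    """Return (lat, lng) of the first result, or None if the status is not OK."""
    if payload.get("status") != "OK" or not payload.get("results"):
        return None
    loc = payload["results"][0]["geometry"]["location"]
    return loc["lat"], loc["lng"]


def fill_missing_coordinates(records, api_key, fetch_json):
    """Geocode only records without coordinates; fetch_json(url) -> dict is injected."""
    for rec in records:
        if rec.get("lat") is None or rec.get("lng") is None:
            coords = parse_geocode(fetch_json(geocode_url(rec["address"], api_key)))
            if coords:
                rec["lat"], rec["lng"] = coords
    return records


def fetch_json(url: str) -> dict:
    """Real HTTP fetch (requires network access)."""
    with urllib.request.urlopen(url, timeout=30) as resp:
        return json.load(resp)
```

In production you would call `fill_missing_coordinates(records, "YOUR_API_KEY", fetch_json)`. Records whose addresses cannot be geocoded keep `None` coordinates, so they remain easy to find and review.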

Preparing Data for Analysis (AI-Ready Data Pipelines)

For advanced analytics, machine learning, or AI applications, your data needs to be meticulously prepared. This involves creating what is often referred to as an "AI-ready data pipeline."

- Feature Engineering: Create new, meaningful features from existing data. For example, you could calculate the average rating of businesses in a specific neighborhood or derive the density of businesses of a certain type within a radius.

- Data Formatting for ML Models: Ensure your data is in a format compatible with your chosen machine learning libraries (e.g., NumPy arrays, pandas DataFrames).

- Data Quality Assurance: Implement rigorous quality checks throughout the pipeline to ensure that the data fed into AI models is accurate and reliable, which is crucial for the effectiveness of the models.
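Both feature-engineering examples above (neighborhood average rating and category density within a radius) can be computed with the standard library. The sketch assumes each record is a dict with `lat`, `lng`, `category`, `rating`, and a hypothetical `neighborhood` key. It uses the haversine formula, which treats the Earth as a sphere; that approximation is adequate for density features within a city.

```python
import math
from collections import defaultdict

EARTH_RADIUS_KM = 6371.0088  # mean Earth radius


def haversine_km(lat1, lng1, lat2, lng2):
    """Great-circle distance between two points, in kilometres."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lng2 - lng1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def density_within(records, lat, lng, radius_km, category=None):
    """Count businesses (optionally of one category) within radius_km of a point."""
    return sum(
        1 for r in records
        if (category is None or r.get("category") == category)
        and haversine_km(lat, lng, r["lat"], r["lng"]) <= radius_km
    )


def avg_rating_by(records, key="neighborhood"):
    """Average rating per group, ignoring records without a rating."""
    sums, counts = defaultdict(float), defaultdict(int)
    for r in records:
        if r.get("rating") is not None:
            sums[r[key]] += r["rating"]
            counts[r[key]] += 1
    return {k: round(sums[k] / counts[k], 2) for k in sums}
```

This brute-force count compares every pair, which is fine for thousands of points; for much larger datasets a spatial index would be the usual next step.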

Advanced Applications and Analysis of Scraped Google Maps Data

The true power of scraped Google Maps data is realized when it's applied to solve complex business problems and drive strategic decisions. Beyond basic lead generation, this data can inform sophisticated market research, enhance sales strategies, and provide deep insights into customer behavior and market trends.

Market Research and Location Intelligence

Scraped Google Maps data is invaluable for understanding market dynamics. Businesses can identify the concentration of competitors in specific areas, assess the saturation of particular industries, or pinpoint geographic regions that are underserved by certain types of businesses.

This location intelligence is fundamental for informed decisions regarding market entry, expansion, or targeted marketing campaigns. By analyzing the density and types of businesses, companies can gain a significant competitive edge.

Lead Generation and Sales Enablement

For sales and marketing teams, scraped Google Maps data can be a powerful tool for identifying high-potential leads. By filtering businesses based on criteria such as industry, location, size (inferred from reviews or description), or even customer sentiment derived from reviews, sales teams can build targeted prospect lists.

This precision allows for more effective outreach, personalized sales pitches, and ultimately, a higher conversion rate. The direct link between local search intent and store visits makes this data exceptionally potent for driving real-world engagement.
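A prospect filter of the kind described above can be a few lines of Python. Everything here is an illustrative assumption: the `LeadCriteria` fields, matching the city by a substring of the address, and using review count as a rough proxy for business size and foot traffic.

```python
from dataclasses import dataclass, field


@dataclass
class LeadCriteria:
    """Hypothetical filter settings for building a prospect list."""
    categories: set = field(default_factory=set)
    city: str = ""
    min_reviews: int = 20        # rough proxy for business size (assumption)
    max_rating: float = 5.0      # e.g. 4.0 to target businesses with room to improve


def qualify_leads(records, criteria: LeadCriteria):
    """Filter listing dicts by category, city, review volume, and rating."""
    leads = [
        r for r in records
        if r.get("category") in criteria.categories
        and criteria.city.lower() in (r.get("address") or "").lower()
        and (r.get("review_count") or 0) >= criteria.min_reviews
        and r.get("rating") is not None
        and r["rating"] <= criteria.max_rating
    ]
    # Most-reviewed first, as a rough stand-in for foot traffic.
    return sorted(leads, key=lambda r: r["review_count"], reverse=True)
```

Keeping the criteria in a small dataclass makes each prospect list reproducible: you can save the settings alongside the results.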

Customer Sentiment Analysis

Analyzing customer reviews scraped from Google Maps offers deep insights into public perception of businesses and their offerings. Natural Language Processing (NLP) techniques can be applied to categorize reviews by sentiment (positive, negative, neutral), identify common themes and keywords, and detect emerging trends in customer feedback.

This can help businesses understand what customers love, what they dislike, and where improvements can be made. This detailed understanding of customer sentiment can guide product development, service improvements, and overall brand strategy.
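To make the idea concrete, the sketch below labels reviews as positive, negative, or neutral by counting words from two tiny hand-written lexicons, and counts frequent non-stopword terms as a crude theme signal. The word lists are placeholders, not a real sentiment model; a production project would use a trained model or an established tool such as NLTK's VADER analyzer.

```python
import re
from collections import Counter

# Tiny illustrative lexicons; replace with a real model for actual analysis.
POSITIVE = {"great", "friendly", "delicious", "clean", "fast", "love", "excellent"}
NEGATIVE = {"rude", "slow", "dirty", "cold", "overpriced", "terrible", "awful"}
STOPWORDS = {"the", "a", "an", "and", "was", "is", "it", "to", "of", "but",
             "very", "we", "i", "our", "were", "too"}


def tokenize(text):
    """Lowercase word tokens (letters and apostrophes only)."""
    return re.findall(r"[a-z']+", text.lower())


def sentiment(text):
    """Label a review by (positive hits - negative hits)."""
    tokens = tokenize(text)
    score = sum(t in POSITIVE for t in tokens) - sum(t in NEGATIVE for t in tokens)
    return "positive" if score > 0 else "negative" if score < 0 else "neutral"


def summarize(reviews, top_n=3):
    """Return sentiment label counts and the most frequent non-stopword terms."""
    labels = Counter(sentiment(r) for r in reviews)
    themes = Counter(t for r in reviews for t in tokenize(r) if t not in STOPWORDS)
    return dict(labels), themes.most_common(top_n)
```

A word-count approach cannot handle negation ("not great") or sarcasm, which is exactly why trained models are preferred; the structure of the output (label counts plus top terms) stays the same when you swap one in.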

Geospatial Analysis and Visualization

With latitude and longitude coordinates, the scraped data can be visualized on interactive maps. This allows for powerful geospatial analysis. Businesses can map out their customer base, visualize competitor locations, identify optimal sites for new branches, or analyze the spatial distribution of demand for their products or services. Tools like Tableau, Power BI, or Python libraries such as Folium and GeoPandas can be used to create compelling visual representations of this data, making complex spatial patterns easily understandable.
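Several of the tools named above, including GeoPandas and Folium, can read GeoJSON, so exporting scraped points to that format is a convenient bridge. The sketch assumes records are dicts with `lat` and `lng` keys; note that GeoJSON (RFC 7946) orders coordinates as longitude, then latitude, a frequent source of points landing in the wrong place.

```python
import json


def to_geojson(records):
    """Build a GeoJSON FeatureCollection of points from listing dicts."""
    features = []
    for r in records:
        if r.get("lat") is None or r.get("lng") is None:
            continue  # skip listings that cannot be placed on a map
        props = {k: v for k, v in r.items() if k not in ("lat", "lng")}
        features.append({
            "type": "Feature",
            # GeoJSON order is [longitude, latitude].
            "geometry": {"type": "Point", "coordinates": [r["lng"], r["lat"]]},
            "properties": props,
        })
    return {"type": "FeatureCollection", "features": features}


def write_geojson(records, path):
    with open(path, "w", encoding="utf-8") as f:
        json.dump(to_geojson(records), f, ensure_ascii=False)
```

The resulting file can be loaded with `geopandas.read_file("places.geojson")` or drawn by adding `folium.GeoJson(...)` to a `folium.Map`.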

Conclusion: Navigating the Future of Google Maps Data Extraction

Scraping data from Google Maps in 2026 offers an unparalleled opportunity for businesses and researchers to gain critical insights into the local business landscape. From understanding customer sentiment through reviews to identifying strategic market opportunities based on location and business type, the potential applications are vast. We have explored the types of data available, the crucial legal and ethical considerations, and the various methods for extraction, including no-code tools, official APIs, and custom Python scrapers.

The journey of data collection is not just about extraction; it extends to meticulous cleaning, processing, and intelligent storage. As the digital world continues to evolve, so too will the methods and challenges of data scraping. By staying informed about Google's terms of service, prioritizing ethical data handling, and employing robust technical solutions, you can confidently and effectively leverage the wealth of information available on Google Maps.

Remember, responsible data collection is not just a legal requirement but a fundamental aspect of building trust and long-term success in the data-driven era. The global location-based services market's projected growth [SNS Insider, 2025] signifies an increasing reliance on this data, making proficiency in its extraction a valuable asset for years to come.